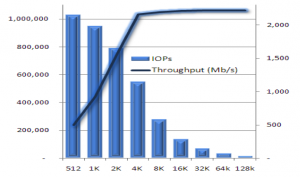

iSCSI is as fast as your hardware can handle. How fast? Try 1,030,000 IOPS over a single 10 Gb Ethernet link!

In March, Microsoft and Intel demonstrated that the combination of Windows Server 2008 R2 and the Xeon 5500 could saturate a 10 GbE link, pushing data throughput to wire speed. Today, they showed that this same combination can deliver an astonishing million I/O operations per second, too.

We’ve heard of science experiments dishing out millions of IOPS before. Texas Memory Systems even offers a 5 million IOPS monster! All of these are impressive, to be sure, but they are proof of concept designs for back-end storage, not SAN performance. Actually pulling a million IOPS across a network has required the use of multiple clients and Fibre Channel links.

The Microsoft/Intel demo demanded some creativity to be sure, but they pushed a million IOPS over a single 10 Gigabit Ethernet link using a software initiator. That’s right, this was a single client with a single 10 GbE NIC pulling a million I/O operations from a SAN. Amusingly, it took many storage targets working together to actually service this kind of I/O demand!

Another thing to note is this was done with the software iSCSI stack in Windows Server 2008 R2, not some crazy iSCSI HBA hardware. Again, an iSCSI HBA would have trouble servicing this kind of I/O load, but an Intel Xeon 5500 has plenty of CPU horsepower to handle the task.

This bests the already-impressive 919,268 IOPS put up by an Emulex FCoE HBA earlier this week. It’s the equivalent of over 5,000 high-end enterprise disk drives and five times greater than the total database I/O operations of eBay.
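For a rough sense of scale, here is a back-of-the-envelope sketch of the two comparisons above. The function names are mine, and the per-drive figure is simply what the "over 5,000 drives" claim implies, not a number from the release.

```python
def relative_gain(new_iops: float, old_iops: float) -> float:
    """Fractional improvement of new_iops over old_iops."""
    return (new_iops - old_iops) / old_iops


def implied_iops_per_drive(total_iops: float, drive_count: int) -> float:
    """IOPS each drive would need to deliver for drive_count drives to match total_iops."""
    return total_iops / drive_count


ISCSI_IOPS = 1_030_000   # headline figure from the Microsoft/Intel demo
FCOE_IOPS = 919_268      # Emulex FCoE HBA figure quoted above
DRIVES = 5_000           # "over 5,000 high-end enterprise disk drives"

print(f"{relative_gain(ISCSI_IOPS, FCOE_IOPS):.1%}")   # 12.0%
print(implied_iops_per_drive(ISCSI_IOPS, DRIVES))      # 206.0
```

So the iSCSI result is about 12% ahead of the FCoE number, and the drive comparison assumes roughly 200 IOPS per spindle.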

So what’s the takeaway message? There are a few:

- Performance is not an issue for iSCSI – Sure, not every iSCSI stack can handle a million IOPS, but the protocol is not the problem. iSCSI can saturate a 10 GbE link and deliver all the IOPS you might need.

- Performance is not an issue for software – Today’s CPUs are crazy fast, and optimized software like the Windows Server 2008 R2 TCP/IP and iSCSI stacks can match or exceed the performance of specialized offload hardware.

- Storage vendors need to step up their game – Whose storage array can service a million iSCSI IOPS? Raise your hands, please! I can’t hear you! Hello? Anyone there?

- Fibre Channel and FCoE don’t rule performance – I don’t know of a Fibre Channel SAN that can push this kind of throughput or IOPS through a single link. Even FCoE over the same 10 GbE cable can’t quite do it. If they are to stay relevant, they had better come up with a compelling advantage over iSCSI!

Under The Hood

Looking at the release a bit closer, we see that this high IOPS limit was reached with 512 byte blocks, with 1k and larger blocks delivering much better throughput but decreasing IOPS. Typical FC HBAs can only reach 160,000 IOPS or so, a rate the iSCSI initiator could handle with large blocks and low CPU utilization.
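The IOPS-versus-throughput trade-off is just arithmetic, and a small sketch makes it concrete. It counts payload bytes only, in one direction, and ignores Ethernet, TCP/IP, and iSCSI header overhead; those simplifications and the function names are my assumptions, not details from the release.

```python
def payload_throughput_gbps(iops: float, block_bytes: int) -> float:
    """Payload moved by `iops` operations of `block_bytes` each, in Gb/s (decimal)."""
    return iops * block_bytes * 8 / 1e9


def max_iops_at_line_rate(link_gbps: float, block_bytes: int) -> float:
    """Upper bound on IOPS in one direction if the link carried payload only."""
    return link_gbps * 1e9 / (block_bytes * 8)


# The headline number: 1,030,000 IOPS at 512-byte blocks.
print(f"{payload_throughput_gbps(1_030_000, 512):.2f} Gb/s")   # 4.22 Gb/s

# One-direction payload ceiling on a 10 Gb/s link for a few block sizes.
for block in (512, 1024, 4096, 8192, 65536):
    print(block, round(max_iops_at_line_rate(10, block)))
```

At 512 bytes the link itself is less than half full, so the limit is how many operations the stack can process, not bandwidth. As blocks grow, the bandwidth ceiling takes over and IOPS has to fall. A full-duplex link carries its rated speed in each direction, so a mixed read/write workload can exceed the one-direction figures printed here.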

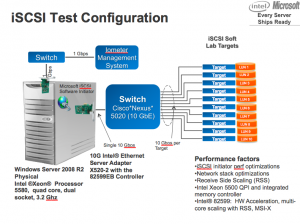

The client side of the test was a quad-core 3.2 GHz Xeon 5580 server running Windows Server 2008 R2. It was connected with an Intel X520-2 10 Gb Ethernet Server Adapter using the 82599EB controller. A Cisco Nexus 5020 switch fanned this out to 10 servers running iSCSI target software. The IOPS measurement was done with the industry-standard IOMeter software.

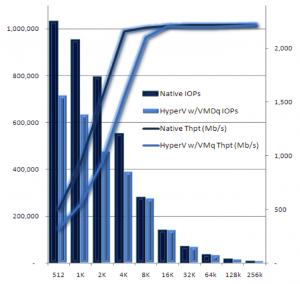

The team also benchmarked this configuration running Microsoft’s Hyper-V server virtualization hypervisor. Since Hyper-V leverages the Windows Server codebase, performance remained remarkably similar. Intel’s VMDq and Microsoft’s VMQ allowed guest operating systems to reach these performance levels, routing ten iSCSI targets to ten guest instances over virtual network links.

Update: Check out this Intel whitepaper on the test!

Dr. Evil/Ballmer mashup image from “The Big Deal”

What’s a “crazy iSCSI HBA”?

I suppose any iSCSI HBA that costs more than a plain old NIC and can’t outperform that NIC plus Microsoft’s software initiator would have to be crazy! One Million IOPS! Hahaha!

1 million IOPS, yeah, but for 512 bytes! Who formats his disk with such a small allocation unit size? Real-world figures are 4KB by default for most NTFS-formatted disks out there, then 8KB for apps like Exchange and 64KB for apps like SQL. And looking under the hood (picture), although iSCSI performs well, it is not the panacea. The 8KB block size does a bit less than 300,000 IOPS with 10GbE, whilst VMware did 364,000 IOPS with 8Gb FC. That’s 20% less, same block size but with a bigger pipe… Spot the error!

I am very interested to know if the test environment was using JUMBO frames or not.

Hey Didier: the VMware 340k test used more than one 8G port. The 300k iSCSI IOPS at 8k was line rate on a single port. Line rate is line rate, and there is no way that a single 8G FC port can push more IOPS than a single line-rate 10G port. And as you hint, the 1 million number was for the headline, but we did provide all block sizes.

It did not use jumbo frames. For large I/O that would have provided another 10-20% boost in CPU efficiency. The benefits of jumbo frames decrease as the servers get faster and faster.

Does anyone know what the iSCSI target (Microsoft, IET?) was, or what benchmark tool was used to capture these numbers?

Also, they have not revealed the vendor of the 10Gb NIC. Was that also from Intel?

According to this Intel whitepaper (notes on bottom of last page), the test was performed with Intel’s awesome 82599 10 Gb server NICs and a StarWind 4.0 software iSCSI target.

very nice, but what does it solve? Hardware people like talking about their dick, software people like talking about the person itself.

It is about the person and what he will mean for you not the performance of separate parts.