I’ve been talking about storage capacity utilization for my entire career, but the storage industry doesn’t seem to be getting anywhere. Every year or so, a new study is performed showing that half of storage capacity in the data center is unused. And every time there is a predictable (and poorly thought through) “networked storage is a waste of time” response.

The good news is that this is no longer a technical problem: Modern virtualized and networked servers ought to have decent utilization of storage capacity, and technology is improving all the time. Consider the compounded impact of modern technology on storage capacity utilization:

- Shared storage (SAN and NAS) allows different servers to share a common pool of storage, reducing the likelihood that excess capacity will be stranded in isolated “puddles”. Pervasive use of NAS technology, and the rise of simple and inexpensive iSCSI SANs, means that every system in the modern data center can use shared storage.

- Organizational and architectural optimization allows storage to be provisioned from a common pool rather than building “stovepipe systems” with their own resources. Quicker provisioning also helps reduce over-provisioning.

- Network connectivity allows servers to share resources, including storage, on a peer-to-peer or client-server basis, ultimately resulting in things like cloud computing.

- Managed and utility services reduce the impact of low utilization, potentially focusing on efficiency or perhaps passing the buck to a service provider.

- Thin provisioning might help certain systems to keep less storage in reserve.

So why don’t things get better? It’s hard to be sure why people don’t use these pervasive tools to improve storage utilization, but I do have some ideas…

- Storage utilization might not be a priority. Utilization isn’t often in the critical path of performance or availability, so overtaxed IT departments aren’t going to focus on it.

- Incentives can be lacking. With the cost of storage constantly falling, the effort required to improve the efficiency of already-allocated storage can be just as easily spent migrating to a newer, cheaper storage platform.

- Virtualization has perversely harmed the efficiency of allocation. One might think that the ease and flexibility of virtual disks would improve things, but they haven’t. Server and storage virtualization just add another place to hide unused storage.

- Metrics remain a problem, since everyone gets all balled up trying even to talk about capacity utilization.

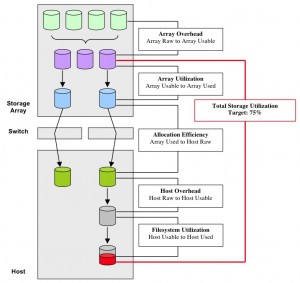

I think this last point is something we in the industry really ought to do something about. We say “utilization” but what do we mean? Chris Evans has proposed a set of metrics for the “storage waterfall”, and I mentioned back in October that this all boils down to three key metrics: Raw, usable, and used. The key question is where to apply them!

Way back before the 2001 bubble-burst, I managed professional services for a company called StorageNetworks. At that time, I was quite aggressive in pushing this same idea, even co-writing a whitepaper on the topic titled Measuring and Improving Storage Utilization. My co-author (Jonathan Lunt) and I recently reminisced about that paper, and we both agreed that everything in it still stands today, apart from the high dollar cost per gigabyte.

I suggest that the following key storage utilization ratios (taken directly from this paper) make just as much sense today as they did then:

- Array Overhead is the percentage of installed storage capacity that is not usable. Dividing Array Usable by Array Raw and subtracting that number from 100% yields the percent of overhead. Overhead here is usually due to the desired level of data protection (e.g. RAID, mirroring) rather than to poor management.

- Array Utilization is the percentage of usable array capacity that is allocated to hosts. It indicates the efficiency of storage deployment operations.

- Allocation Efficiency reflects the ratio of storage presented or allocated to hosts to the amount actually seen by them. In many mature environments this ratio is near 100% (i.e. all the storage allocated is being seen), but this ratio can be extremely difficult to determine. It relies on accurate measurements of both Array Used storage and Host Raw.

- Host Overhead reflects the amount of storage configured for use versus the amount the host can see. Since the Host Raw metric is a function of the storage administration team and the Host Usable a function of the systems administration team, this metric is a useful measurement of how well the two functions are cooperating. Data for this classification is collected from the host.

- File System Utilization is the amount of available file system space that actually contains data. File system utilization is familiar to most systems administrators. This metric is often shown in simple system commands like “df” on UNIX or “dir” on Windows. Data for this classification is collected from the host.

- Total Storage Utilization summarizes how well a company manages its storage assets across the entire business. This ratio is the default storage utilization metric used in publications and reflects the actual value an enterprise is deriving from its storage asset. Care is required in calculating this ratio to ensure that it accurately indicates utilization of the storage environment. Since the result of this ratio is often used in business cases and receives wide attention, it must be both logical and defendable.
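As a rough sketch of how these ratios fit together, here is one way to compute them from the three key metrics (raw, usable, used) measured at both the array and the host. The class and function names are hypothetical, and several formulas are assumptions rather than quotes from the paper: Allocation Efficiency is taken as Host Raw over Array Used, Host Overhead is modeled by analogy with Array Overhead, and Total Storage Utilization is taken as Host Used over Array Raw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapacitySnapshot:
    """Six capacity measurements, all in the same unit (e.g. TB)."""
    array_raw: float     # installed capacity in the array
    array_usable: float  # capacity left after RAID, spares, etc.
    array_used: float    # capacity allocated or presented to hosts
    host_raw: float      # capacity the hosts can actually see
    host_usable: float   # capacity configured for use (file systems)
    host_used: float     # capacity that actually contains data


def utilization_ratios(c: CapacitySnapshot) -> dict:
    """Return each ratio as a fraction between 0 and 1."""
    return {
        "array_overhead": 1 - c.array_usable / c.array_raw,
        "array_utilization": c.array_used / c.array_usable,
        # Assumption: storage seen by hosts divided by storage allocated.
        "allocation_efficiency": c.host_raw / c.array_used,
        # Assumption: modeled the same way as array overhead.
        "host_overhead": 1 - c.host_usable / c.host_raw,
        "file_system_utilization": c.host_used / c.host_usable,
        # Assumption: data actually stored divided by installed capacity.
        "total_storage_utilization": c.host_used / c.array_raw,
    }


# Made-up numbers purely for illustration.
example = CapacitySnapshot(
    array_raw=100, array_usable=80, array_used=60,
    host_raw=60, host_usable=48, host_used=24,
)
ratios = utilization_ratios(example)
for name, value in ratios.items():
    print(f"{name}: {value:.0%}")
```

With these invented numbers, every individual ratio looks tolerable on its own, yet only 24 of the 100 installed units hold data. That compounding is why the place where each metric is applied matters so much.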

To these, I would add another intermediate and optional set of virtualization metrics and ratios for environments with storage or server virtualization. One could also presumably add a higher-level set of application efficiency ratios as well.

In the paper, Jon and I also proposed three best practices to improve storage utilization:

- Drive Array Utilization (Array Usable to Array Used) to greater than 90% (a storage administration responsibility)

- Drive Allocation Efficiency: Bring Host Usable to be as close to Array Used as possible (a joint responsibility)

- Drive Filesystem Utilization (“Host Usable to Host Used”) above 80% (a systems administration responsibility)
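To make these targets concrete, the following minimal sketch checks a set of measurements against the 90% and 80% thresholds above. The function name and return shape are hypothetical. Because no numeric target is given for allocation efficiency, the sketch simply reports the gap between Array Used and Host Usable rather than passing or failing it.

```python
ARRAY_UTILIZATION_TARGET = 0.90       # from the first practice above
FILESYSTEM_UTILIZATION_TARGET = 0.80  # from the third practice above


def review_targets(array_usable, array_used, host_usable, host_used):
    """Compare measurements (all in the same unit) with the three practices."""
    array_utilization = array_used / array_usable
    filesystem_utilization = host_used / host_usable
    return {
        "array_utilization_ok": array_utilization > ARRAY_UTILIZATION_TARGET,
        "filesystem_utilization_ok": (
            filesystem_utilization > FILESYSTEM_UTILIZATION_TARGET
        ),
        # Capacity allocated by the array but not configured on hosts;
        # the aim is to bring this as close to zero as possible.
        "allocation_gap": array_used - host_usable,
    }


# Made-up numbers purely for illustration.
report = review_targets(
    array_usable=80, array_used=76, host_usable=70, host_used=49
)
print(report)
```

In this invented example the storage team meets its target (95% of usable capacity is allocated), the file systems fall short at 70%, and 6 units are allocated but not configured on any host.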

Go read the paper and let me know what you think. Are we still stuck in 2001?

This post can also be found on Gestalt IT: Storage Utilization Remains at 2001 Levels: Low!

Storage utilization is about more than how capacity is deployed; it is also about whether the storage system itself has the performance to support the stored data. Traditional storage systems often slow down even when the device is not near its full listed capacity. In our eight years of shipping product, it has not been uncommon for customers who call us for help to have already exceeded 90% utilization, as our architecture enables customers to keep performance at peak even as capacity increases.

Stephen, I like Chris’s waterfall better because it’s less abstract. For instance, calling it RAID loss is more to the point than saying array overhead.

You know I have to talk about thin provisioning – something you seem to have the greatest reluctance in accepting, even though you did include it in your list of technologies. The fact is, there is a huge amount of efficiency to be gained by using it. The key storage ratios of Array Utilization, Allocation Efficiency and Host Overhead are all optimized for highest utilization by Thin Provisioning. TP doesn’t use the large, raw storage chunks that get wasted by “less than best practices”. Wasted storage is most effectively addressed by a good TP implementation.

I think you’d be helping more people deal with their capacity utilization problems by saying there are certain environments where you might choose not to use thin provisioning – instead of giving it the sisterly kiss that you did.

This is very true – not every system can handle high utilization. This includes both arrays and servers/filesystems, of course. Remember the old UNIX standard of reserving 10% of the filesystem for root use only? One should not expect to max out effective utilization to 100%, but hopefully most systems can handle more than 80%!

But there’s a lot more than RAID loss implied in the difference between raw and usable. It could be LOTS of things, including RAID, but also certainly including hot spares, OS, cache write reserve, and perhaps even “required free space” as alluded to by Lewis above. Anything that impacts usable capacity could go here, and there’s precious little anyone can do to optimize it away. So one shouldn’t be penalized for maintaining required overhead. Again, this can happen both at the array and server level, and in virtualization systems and applications as well. We can never get to 100% utilization for most metrics.

As for thin provisioning, I did say “Thin provisioning might help certain systems to keep less storage in reserve” which amounts to a great big hug coming from a skeptic like me! Give me time and prove it to me! Hahaha

Right, RAID, spares, cache reserve, private areas, req’d free space – yes. An acronym: RSCRPARFS!

Did you say we shouldn’t penalize people for maintaining required overhead? Maybe I missed that before, but are you advocating a judgment thing here – maybe like reality TV, but for storage admins? Something like the biggest non-loser, or the least wasted?

Yes. Even earlier than 2001, in my opinion. I blogged on this (http://blogs.netapp.com/shadeofblue/2008/09/elf-wealth-and.html) to point out that various technologies, deduplication amongst them, make a nonsense of counting real bytes. With dedupe, you can double, treble, or quadruple count; for instance, packing 10TB of VMs into 1TB of disk is possible.

How do we account for that?
