I’m a storage connectivity nerd, but I often learn new things I missed. Reading about the new Tyan servers with AMD’s Epyc server processor, I was surprised by an off-hand mention of “OCuLink” as a storage expansion port. Naturally, I did some digging and discovered what it is: OCuLink is a competitor for Thunderbolt for cabled PCI Express connectivity, offering similar performance to Thunderbolt 3 in a different form factor.

Zero to Hero: Thunderbolt

I was an early enthusiast for Thunderbolt (originally called Light Peak), since it opened up a whole new world of I/O performance. And, being a storage nerd, I was particularly keen on the combination of fast PCIe connectivity and flash storage peripherals. Although the original Thunderbolt fell short of expectations (being mainly Mac and copper, not a universal PCIe-over-fiber dream), later revisions have matured nicely.

Today’s Thunderbolt 3 boasts a 40 Gbps link carrying PCIe 3.0, along with up to 100 Watts of power delivery and support for USB, DisplayPort, and HDMI protocols besides. Although interoperability is more of a nightmare than a dream, Thunderbolt 3 works and is supported on many platforms and peripherals. With Intel and Apple pushing solidly to promote the interface, and leveraging the popularity of USB-C, Thunderbolt 3 looks to become a real multi-platform standard.

We are seeing generic motherboards shipping with Thunderbolt 3 onboard, not to mention high-end PC’s and just about every Apple Macintosh computer. It wouldn’t be surprising to start seeing Thunderbolt 3 showing up in server hardware as an interconnect for external PCIe chassis full of NVMe storage or co-processors for machine learning. And Intel is talking about moving Thunderbolt support inside the CPU for the next generation.

So What’s OCuLink?

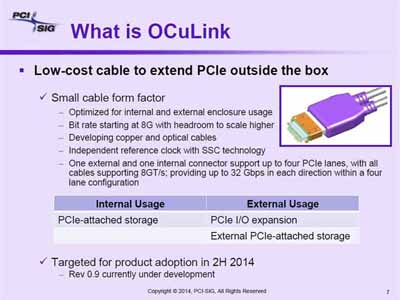

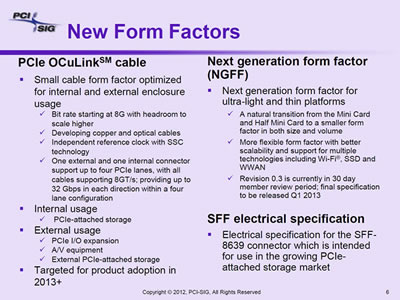

But this wasn’t always a given. And apparently the PCI-SIG (standards body for the PCI interface) thought so too. So in 2012, word started spreading that PCI-SIG was developing a standard cabled protocol for PCIe devices off the motherboard. And this standard would be free and unencumbered by corporate overlords, Apple and Intel.

Thunderbolt had already reached the market by then, but it used Mini DisplayPort connectors and only supported PCIe 2.0, for aggregate throughput of 20 Gbps. In contrast, OCuLink would start with four lanes of PCIe 3.0, good for 32 Gbps of throughput.
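For the curious, here is a rough sketch of where per-link numbers like these come from. It multiplies the per-lane signaling rate by the lane count and then applies the line-encoding overhead (8b/10b for PCIe 2.0, 128b/130b for PCIe 3.0 and 4.0). The table and function names are my own, and the result ignores packet and protocol overhead, so treat it as an upper bound rather than a benchmark.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency by PCIe generation.
# Names here are illustrative, not taken from any specification or library.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "4.0": (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_throughput_gbps(generation, lanes):
    """Return (raw, usable) throughput in Gbps for one direction of a link.

    'raw' is the signaling rate times the lane count; 'usable' subtracts
    only the line-encoding overhead, not packet or protocol overhead.
    """
    rate, efficiency = PCIE_GENERATIONS[generation]
    raw = rate * lanes
    return raw, raw * efficiency


if __name__ == "__main__":
    for label, generation, lanes in [
        ("PCIe 2.0 x4", "2.0", 4),
        ("PCIe 3.0 x4 (first-generation OCuLink)", "3.0", 4),
    ]:
        raw, usable = pcie_throughput_gbps(generation, lanes)
        print(f"{label}: {raw:.0f} Gbps raw, {usable:.1f} Gbps after encoding")
```

By this estimate, the four-lane PCIe 3.0 link works out to 32 Gbps raw, or roughly 31.5 Gbps once the encoding overhead is taken out.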

Like Thunderbolt, the initial plan was for both optical and copper cables to be used. But both standards hit roadblocks as optical cables failed to materialize in volume. Although there are optical Thunderbolt cables, it’s a far cry from the silicon photonics wonderland promised by Light Peak. And although the “O” in OCuLink stands for “optical”, every implementation I could find uses the “Cu” for copper!

Early reports gushed about OCuLink for laptops, allowing external GPU’s to be connected to thin-and-light PC’s. But this has been a rare application even in the Thunderbolt space (Apple just introduced official support in 2017), and I couldn’t find any OCuLink laptops or GPU enclosures on the market.

Instead, OCuLink was picked up by server developers looking for an in-box PCIe interconnect for storage or I/O virtualization connectivity. And the standard 48-pin connector and cable were sometimes re-purposed to carry multiple 12 Gbps SAS 3.0 channels as an alternative to Mini-SAS HD, replacing the old 4-channel SFF-8087 mini-SAS connectors we in storage have learned to love.

SFF-8639, SATA Express, and U.2

OCuLink has a kinship to another PCIe interface that might just be more popular. At the same time that the OCuLink cable was introduced, the PCI-SIG also pushed for a drive-attachment interface with PCIe using a connector called SFF-8639. This was initially used for SATA Express drives, a 2-lane PCIe storage interface.

However, in 2015 SFF-8639 was officially renamed “U.2” for four-lane PCIe storage applications. This has become somewhat more popular. So, in a way, U.2 is a cousin of OCuLink and some devices might even use OCuLink protocol over the U.2 connector!

U.2 drives look like conventional 2.5” SSD’s. So they might take off in servers and datacenter applications. But lately, most PCIe storage implementations are leaning towards the compact M.2 interface instead. And on the pro-sumer side, it’s almost as difficult to find a U.2 motherboard or drive as it was to find SATA Express!

OCuLink-2

In 2016, PCI-SIG announced OCuLink-2, bringing PCIe 4.0 bandwidth and a new connector. Ironically, the OCuLink-2 external connector and cable bear a strong resemblance to full-size DisplayPort, bringing us back to the days of Thunderbolt’s Mini-DisplayPort cable.

So far, there has been little discussion of OCuLink-2, but perhaps that’s changing. The aforementioned Tyan server chassis actually appears to use OCuLink-2, supporting either PCIe 3.0 x8 or sixteen 6 Gbps SAS/SATA drives.

Stephen’s Stance

With the advent of AMD Threadripper and Epyc, we are about to see an explosion of PCIe lanes in the pro-sumer and datacenter market. Although many of those lanes will be taken up by conventional PCIe cards, some will be used for SSD’s (M.2 and U.2) or for external connectivity. This is where OCuLink might finally take off: As an AMD alternative to Thunderbolt for external PCIe peripheral connectivity.

PCI-SIG has had standards for external connections for a while. A few years ago, several vendors had external I/O virtualization appliances. While the most successful of these, Xsigo, used InfiniBand as the interconnect from server to appliance, others (whose names escape me) used cheap PCIe paddleboards that plugged into a server slot and used the then-current (PCIe 2.0?) external cabling standard. A 2007 article on that first-gen stuff: http://www.onestopsystems.com/blog-post/pcie-over-cable-goes-mainstream

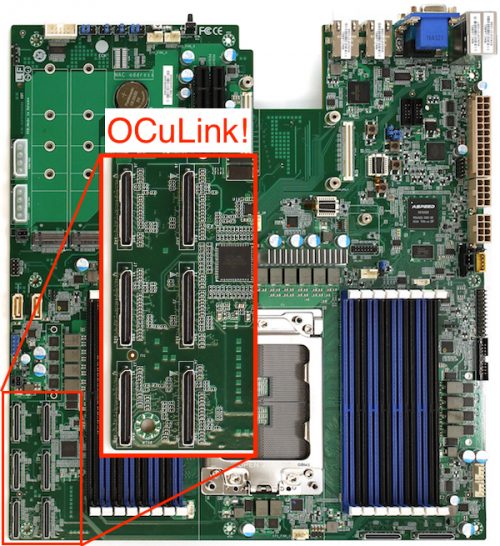

Following your logic that OCuLink and U.2 seemed related, it looks to me like those ports on the Tyan card are intended for motherboard-to-midplane connections in a software-defined storage application. Six 4-lane connectors would total 24 lanes that short cables could bring to the midplane.

Actually, looking at the motherboard more closely, I think I see 8 total OCuLink connectors. There are two more on the lower right corner of the board. Definitely geared towards HDD midplane connections though. Their website says each OCuLink port carries 8 PCIe lanes, for a total of 64 lanes available for midplane use.

And then Thunderbolt (finally) decided to open up. I wonder if it had anything to do with OCuLink.

So we already have everything needed for a full-speed external video card without compromises and proprietary connectors?!

We might see a future where a video card comes in a nice box, like an external sound card, and connects to any device with OCuLink, from gaming PCs to netbooks and even ultra-small devices like the GPD Win.

M.2 and U.2 are similar enough that one can simply view them as different form factors. A mere passive adapter can move M.2 PCIe to U.2. A well-known Arab currently living in China sells cables from M.2 to U.2 for €8 apiece or €20 for four. Yes, these are really “just cables” with an M.2 plug on one side and a U.2 SFF plug on the other. So, technically speaking, nearly every board currently sold can use U.2 devices with a mere cable.

OCuLink is quite a different beast and far removed from consumer products.

So, this is it, OCuLink-2 on ROG G13. Expect third party eGPU docks.

The OCuLink connectors strongly resemble the SATA connectors on a Dell R640 system board. Here’s a photo of one of them:

https://sealevel.info/PXL_20231115_171423702_Dell_R640_SATA_connector.jpg

Does anyone know what those are?