Why do some data storage solutions perform better than others? What tradeoffs are made for economy and how do they affect the system as a whole? These questions can be puzzling, but there are core truths that are difficult to avoid. Mechanical disk drives can only move a certain amount of data. RAM caching can improve performance, but only until it runs out. I/O channels can be overwhelmed with data. And above all, a system must be smart to maximize the potential of these components. These are the four horsemen of storage system performance, and they cannot be denied.

The Nature of Disks

Hard disk drives are getting faster all the time, but they are mechanical objects subject to the laws of physics. They spin, their heads move to seek data, they heat up and are sensitive to shock. Storage industry insiders recognize the physicality of hard disk drives in the name we apply to them: Spindles. And there is no way to escape the bounds of a spindle.

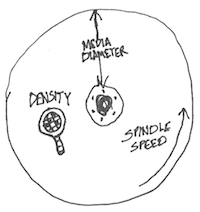

The performance of a hard disk drive is constrained by both its physical limitations and how we use it. Physically, a hard disk drive must spin its platters under a moving arm with a read/write head at the tip. This arm sweeps across the media while the platter rotates, so every piece of data has a two-dimensional address: a radial position and an angle. Hard disk drives spin at a constant speed, so data at the edge passes under the head more quickly than data at the center. The result is a distinctive performance curve, with sequential throughput highest on the outer tracks and falling toward the center.
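The edge-versus-center effect can be sketched with a little geometry. The snippet below is a minimal illustration, assuming a constant 7,200 rpm and hypothetical outer and inner track radii of 46 mm and 20 mm (illustrative values, not taken from any drive's specification). If bits are packed at roughly the same linear density on every track, sequential throughput scales with these speeds.

```python
import math


def track_speed_m_per_s(rpm: float, radius_mm: float) -> float:
    """Linear speed of the media under the head at a given radius."""
    revolutions_per_s = rpm / 60.0
    circumference_m = 2.0 * math.pi * (radius_mm / 1000.0)
    return circumference_m * revolutions_per_s


# Hypothetical radii, chosen for illustration only.
OUTER_MM, INNER_MM = 46.0, 20.0

outer = track_speed_m_per_s(7200, OUTER_MM)
inner = track_speed_m_per_s(7200, INNER_MM)
print(f"outer track: {outer:.1f} m/s")
print(f"inner track: {inner:.1f} m/s")
print(f"outer/inner ratio: {outer / inner:.2f}")
```

Because the rotation rate is the same everywhere, the ratio depends only on the two radii, which is why the curve has the same shape at any spindle speed.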

Hard disk drives are random-access devices, but they cannot access multiple locations at once. Modern command queueing lets the drive controller reorder requests for efficiency, yet I/O operations are still serialized before the drive can act on them. It takes a moment for the head to move (seek time) and the disk to spin (rotational latency) before data can be accessed, so sequential operations are much faster than random ones.
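Seek time and rotational latency can be turned into a rough estimate of random I/O capacity. The sketch below assumes average rotational latency is the time for half a revolution, and it uses made-up average seek times for each spindle speed (assumptions for illustration, not measured figures). It ignores transfer time, caching, and command queueing.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


def random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rough random operations per second for one spindle."""
    service_time_ms = avg_seek_ms + avg_rotational_latency_ms(rpm)
    return 1000.0 / service_time_ms


# (rpm, assumed average seek time in ms); seek values are illustrative.
examples = [(5400, 12.0), (7200, 9.0), (15000, 3.5)]

for rpm, seek_ms in examples:
    latency = avg_rotational_latency_ms(rpm)
    iops = random_iops(rpm, seek_ms)
    print(f"{rpm:>6} rpm: {latency:.2f} ms latency, ~{iops:.0f} random IOPS")
```

Even with generous assumptions, a single spindle lands in the range of tens to a couple of hundred random operations per second.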

Most operating systems lay data out sequentially, beginning at the edge of the disk and moving inward. Although modern file systems try to keep individual files contiguous and optimize placement to keep similar data together, seeking is inevitable. This is the nature of physical hard disk drives.

Accelerating Disks

Disks can be made faster mechanically in one of three ways:

- Data can be packed closer together, allowing more to be read in a given amount of time. This is a natural outgrowth of storage density improvements, and explains why today’s slowest hard disks have much better sequential access performance than the fastest enterprise drives of just a few years past.

- Spindle speeds can be accelerated. This has a dual benefit of passing more data under the heads in the same amount of time and reducing the time it takes for the proper spot on the disk to pass the heads. Maximum spindle speed has remained constant at 15,000 rpm for decades, but the slowest drives have accelerated in recent years, with 5,400 rpm now the minimum and 7,200 rpm now common even for portable devices.

- Disk media can be made smaller, reducing the area the heads must travel across. Increasing media density makes smaller disks viable even outside space-constrained applications like laptops. Many high-speed enterprise drives use small-diameter media even where the outer shell remains at the standard 3.5″ form factor.
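The first two methods can be compared with a simple model: if one full track passes under the head per revolution, sequential throughput is the track capacity times the revolutions per second. The sketch below uses hypothetical track capacities (1,024 KB and 512 KB are illustrative assumptions only) and ignores head switches, track-to-track seeks, and interface limits.

```python
def sequential_mb_per_s(track_kb: float, rpm: float) -> float:
    """Data passing under one head per second, at one track per revolution."""
    revolutions_per_s = rpm / 60.0
    return track_kb * revolutions_per_s / 1024.0


# Hypothetical track capacities, for illustration only.
dense_slow = sequential_mb_per_s(track_kb=1024.0, rpm=7200)
sparse_fast = sequential_mb_per_s(track_kb=512.0, rpm=15000)

print(f"dense 7,200 rpm drive:   {dense_slow:.0f} MB/s")
print(f"sparse 15,000 rpm drive: {sparse_fast:.0f} MB/s")
```

Under these assumptions, doubling the data per track roughly offsets a doubling of spindle speed, which is one way a newer slow drive can keep pace with an older fast one on sequential work.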

Each of these methods is limited by practicality, however. Doomsayers have been declaring for decades that density is reaching the limits of technology, and while the industry has always surpassed these expectations, it can only adapt so far. Spindle speeds beyond 15,000 rpm are impractical as well, especially since higher speeds limit the storage density that can be used. And geometry limits how small hard disk media can get: the spindle itself requires some space, leaving less area for data storage. In fact, it appears that today’s 15,000 rpm 2.5″ hard disk drives are as quick and small as practicality allows. Only increasing density will improve their native performance further.

Combining Spindles

Although hard disk drives are quick, their mechanical limitations make them the first suspect in cases of poor storage performance. A single modern hard disk drive can easily read and write over 100 MB per second, with the fastest drives pushing twice that much data. But most applications do not make this sort of demand. Instead, they ask the drive to seek a certain piece of data, introducing latency and reducing average performance by orders of magnitude.
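The size of that drop can be estimated with simple arithmetic. The sketch below assumes a drive that streams 100 MB per second sequentially and needs 12 ms of positioning time (seek plus rotational latency) before each 4 KB random read. Both the request size and the positioning time are assumptions chosen for illustration.

```python
def effective_mb_per_s(io_size_kb: float, positioning_ms: float,
                       sequential_mb_per_s: float) -> float:
    """Throughput when every request pays a positioning delay before transfer."""
    io_size_mb = io_size_kb / 1024.0
    transfer_ms = io_size_mb / sequential_mb_per_s * 1000.0
    total_s = (positioning_ms + transfer_ms) / 1000.0
    return io_size_mb / total_s


SEQUENTIAL = 100.0  # MB/s, from the text's "over 100 MB per second"

# 4 KB requests and 12 ms positioning are assumed values.
random_rate = effective_mb_per_s(4.0, 12.0, SEQUENTIAL)
print(f"random 4 KB reads: {random_rate:.2f} MB/s")
print(f"slowdown versus sequential: {SEQUENTIAL / random_rate:.0f}x")
```

Nearly all of the time per request goes to positioning the head rather than moving data, which is why small random I/O falls so far below the headline sequential rate.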

Then there is the I/O blender of multitasking operating systems and virtualization. Just as each application requests data spread across a disk, multitasking operating systems allow multiple applications and process threads to request their own data at once. File system development has lagged behind the advent of multi-core and multi-thread CPUs, leading to frustrating slowdowns while the operating system waits for the hard disk drive. Virtualization magnifies this, allowing multiple operating systems running multiple applications with multiple threads to access storage all at once.

The key innovation in enterprise storage, redundant arrays of independent disks or RAID, was designed to overcome the limits of disk spindles. In their seminal paper on RAID, Patterson, Gibson, and Katz focus on “the I/O crisis” caused by accelerating CPU and memory performance. They suggest five methods of combining spindles (now called RAID levels) to accelerate I/O performance to meet this challenge. Many of today’s storage system developments are outgrowths of this insight, allowing many more spindles to share the I/O load or optimizing it between different drive types.

The Rule of Spindles

This is the rule of spindles: Adding more disk spindles is generally more effective than using faster spindles. Today’s storage systems often spread I/O across dozens of hard disk drives using concepts of stacked RAID, large sets, subdisk RAID, and wide striping.

Faster spindles can certainly help performance, and this is evident when one examines the varying performance of midrange storage systems. Those that rely on large, slow drives are much slower than the same systems packed with smaller, quicker drives. But the rule of spindles cannot be ignored. Systems that spread data across more spindles, regardless of the capabilities of each individual disk, are bound to be quicker than those that use fewer drives.
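A back-of-the-envelope comparison shows how spindle count and spindle speed trade off. The sketch below assumes random load spreads evenly across drives and ignores RAID write penalties, controller limits, and cache. The per-drive IOPS figures (180 for a fast drive, 75 for a slow one) are assumptions for illustration, not benchmarks.

```python
import math


def array_random_iops(drive_count: int, iops_per_drive: float) -> float:
    """Aggregate random IOPS if load spreads evenly across all drives."""
    return drive_count * iops_per_drive


def drives_needed(target_iops: float, iops_per_drive: float) -> int:
    """Smallest number of drives whose combined IOPS reaches the target."""
    return math.ceil(target_iops / iops_per_drive)


# Assumed per-drive figures, for illustration only.
FAST_IOPS = 180.0  # roughly a 15,000 rpm drive
SLOW_IOPS = 75.0   # roughly a 7,200 rpm drive

fast_array = array_random_iops(8, FAST_IOPS)
slow_array = array_random_iops(24, SLOW_IOPS)
print(f"8 fast drives:  {fast_array:.0f} IOPS")
print(f"24 slow drives: {slow_array:.0f} IOPS")
print(f"slow drives needed to match 8 fast: {drives_needed(fast_array, SLOW_IOPS)}")
```

In this simplified model the wider array of slow drives comes out ahead once it has enough spindles to pass the break-even count.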

Onward: Cache, I/O, and Smarts

The horseman of spindles is harsh, but he does not rule the day. There are many ways to overcome his limits and his three brothers often come into play. These are cache, which bypasses the spindle altogether; I/O, which can constrain even the fastest combination of disk and cache; and the intelligence of the whole system, which limits or accelerates all the rest. We will examine these horsemen in the future!

I’ve been meaning to write this up for a long time. Thanks for listening and commenting!

Very good article ! Thanks

Nice write-up Stephen. One mental issue I have with the shrinking media diameter. If you have less area for the heads to travel across the platter, that’s a good thing.

But there has to be a point where it becomes a bad thing b/c you’re also reducing the surface area that can be covered during a rotation. If you lose diameter faster than you gain density, it’s a net loss, right? Maybe that only applies to sequential, and not random reads / writes. You know more about it, what do you think?

You are correct, sir! There’s a very specific relationship between decreasing media diameter and potential capacity, and the magic point seems to be somewhere around the 2.5″ form factor. Thus the failure of 1.8″ and smaller disk drives.

But an array of zippy microdrives is cool to imagine!

Agreed, well done Stephen. Thank you for contributing this piece.

There’s also the venerable debate about “short-stroking”, where you deliberately sacrifice a disk’s innermost tracks in order to save on seek time. The idea is that you have no control over rotational latency but if you can shave off 2 of the 4 msec typical for a write seek, you can actually get a better price/performance ratio from buying large SATA drives and short-stroking them than you can from buying small, fast (but expensive) high-end drives. I doubt the technique is common in enterprise arrays but long before SSD emerged short-stroking was used by people looking to squeeze every last drop of performance out of mechanical spinning rust.

Zippy microdrives will arrive someday. HAMR or other technologies will ensure it happens. It will be interesting to see what will happen as densities skyrocket. I think we will see some really innovative solutions that will bend cost curves, tolerate multiple failures, and reside in much smaller form factors.

This post gets us back to thinking about the nature of disk storage at its most rudimentary level. I think there are so many discussions about storage “features” that we completely forget about storage fundamentals. Fundamentals that include how we store data safely and how we retrieve that same data fast enough to keep our new virtualized environments fed.

I would think about adding vibration to the horseman of spindles topic. The very act of combining spindles means we are also arranging the spindles very close to one another. When we cram all of the drives into drive bays with little thought of how vibration can affect our storage, we, perhaps unconsciously as storage consumers, trade off performance each time a head has a miss and has to re-seek. With higher density and spindle speeds, vibration becomes a detriment to the very performance improvements we seek to achieve.

Also, I think your “Rule of Spindles” is dead on and I think gets lost when we acquire and deploy new storage with the higher capacity drive technologies. I tend to think about this issue in terms of I/O per TB. I recall how many spindles it took to get to 1 TB with 9 GB or 18 GB disk drives (113 and 57 respectively w/o RAID). Now I can get to the same capacity in one disk drive and our disk drive manufacturers have not been able to stuff the same I/O performance in each of these large drives that you would have attained with lower capacity drives.

I look forward to your follow on posts on this subject.