The buzz about Fibre Channel over Token Ring has built rapidly over the last week. Industry experts like Greg Ferro, Denton Gentry, and Joe Onisick have weighed in (as have I), and the Packet Pushers Podcast featured the news in show 12, “Get on the Ring!” Some have called out FCoTR as a foolish hoax, but the FCoTR phenomenon is not foolish. Indeed, FCoTR gives everyone in the industry the chance to reevaluate the current state of the art and has exposed real weaknesses in the Ethernet-centric future of the data center.

The Best Tech Rarely Wins

It has become something of a maxim in technology circles that “the best technology rarely wins.” While many point to the victory of VHS over Betamax, techies often look to the success of CISC over RISC, Windows over UNIX or OS/2, and PC over Macintosh. In every case, it was the plentiful availability of cheap “good enough” products that trumped any apparent technical superiority. And in every case research and development led the purported “inferior” technology to eventually surpass the capabilities of the favorite.

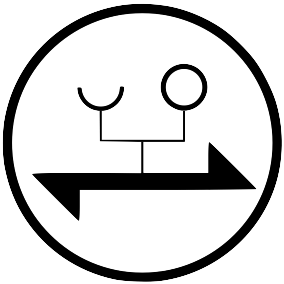

The market failure of Token Ring was an ideal case study for what might be called the Betamax lesson. The token-passing scheme served to enhance both reliability and predictability. Unlike Ethernet, which focused on fault tolerance with “conversational” CSMA/CD, Token Ring took an ordered “Robert’s Rules” approach, with each station waiting for permission before transmitting. This mechanism could also allow a station to reserve bandwidth for a critical application. Token Ring networks were inherently faster than Ethernet as well, at 4 Mb/s, 16 Mb/s, and eventually 100 Mb/s. But the expense and complexity of cabling caused it to lose favor. This was especially true once 100BASE-TX Ethernet became common: It was fast enough for most LANs, and wide support and availability made it incredibly cheap. Gigabit Token Ring was standardized in 2001 but never implemented.
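For readers who never ran a ring, the “wait for permission” idea can be sketched in a few lines of Python. This is a toy model, not the IEEE 802.5 protocol: the function name `token_ring_round`, the `frames_per_token` quota, and the frame labels are all made up for illustration, and the priority and reservation mechanism mentioned above is left out entirely.

```python
from collections import deque


def token_ring_round(queues, frames_per_token=1):
    """Pass the token once around the ring and return what was sent.

    `queues` holds one deque of pending frames per station, in ring order.
    Only the station currently holding the token may transmit.
    """
    sent = []
    for station, pending in enumerate(queues):
        # The token arrives here; this station may send up to its quota.
        for _ in range(frames_per_token):
            if not pending:
                break  # nothing to send, so release the token early
            sent.append((station, pending.popleft()))
        # The token is then passed to the next station on the ring.
    return sent


ring = [deque(["a1", "a2"]), deque(), deque(["c1"])]
print(token_ring_round(ring))  # [(0, 'a1'), (2, 'c1')]
print(token_ring_round(ring))  # [(0, 'a2')]
```

Because nobody transmits without the token, there are no collisions in this model, and with one frame per token hold a station on an N-station ring waits for at most N-1 other frames before its turn comes around. That bounded wait is the predictability the paragraph above describes.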

The Elephant in the Room

Ethernet has developed rapidly since it became the de facto data center standard. Gigabit Ethernet ports and switches are common today, and engineering has made it fairly reliable and interoperable in practice. The spread of IP networks also helped Ethernet: Many applications rely on TCP for reliable communication, masking collisions and data link errors even as switched full-duplex links and improved DSPs reduced their frequency. The industry is currently shifting again, with 10 GbE becoming more common and 40 Gb and 100 Gb Ethernet standardized and rolling out in high-end switches. Convergence is the word of the day in data center circles, and Ethernet is the heir apparent to rule the converged world. To handle new traffic types (Fibre Channel and RDMA, for example), Ethernet is being extended with prioritization and reservation of bandwidth, advanced congestion management to avoid packet loss, and a mechanism to specify what capabilities are present.

From Star Trek III:

James T. Kirk: Scotty, as good as your word

Montgomery Scott: Aye, sir. The more they overthink the plumbing, the easier it is to stop up the drain. Here, Doctor, souvenirs from one surgeon to another. I took them out of her main transwarp computer drive.

Although interest in (and much enthusiasm for) these Data Center Bridging (DCB) extensions is widespread, an undercurrent of trepidation is present as well. Storagers worry whether Ethernet is really ready to handle their precious cargo, and networkers are concerned over the proprietary and complex nature of these add-ons. Independent voices also fear the gusto with which vendors are endorsing them, with Fibre Channel over Ethernet (FCoE) becoming a particular object of skepticism. Many see these moves as a land grab rather than a use case-driven expansion.

Stephen’s Stance

We may laugh at the idea of Fibre Channel over Token Ring (FCoTR) in today’s data center, but the concept also exposes real fears about a future data center dominated by converged I/O reliant on Ethernet. The putative features of FCoTR are exactly the weaknesses traditionally seen in Ethernet: Packet loss, congestion, and mediocre hardware make it unsuitable for sensitive payloads like storage. Would a resurgence of Token Ring, engineered with these in mind, really be so bad?

Although data center extensions and “big iron” equipment promise to eliminate these weaknesses, many remain concerned about an Ethernet-dominated data center. At what cost will these enhancements be delivered? Do we really need them? There is also a real technical concern that Ethernet might not withstand another round of bracing and might instead fall over on its crutches. At the very least, data center-class Ethernet is late and overweight. It is always wise to consider the next step carefully when so much is on the line.

Stephen,

Another great article and kudos on FCoTR as a whole. The FCoTR buzz you and others have started definitely highlights the real question of whether we are looking at converged networks correctly. I’m a big proponent of FCoE as an access layer consolidation tool but I definitely question it as an end-to-end data center consolidation method.

I also support DCB to enhance Ethernet’s capabilities and provide tools for convergence, but again, as an end-to-end tool, is it a solution or a band-aid? We see band-aids for underlying technology issues constantly: things like virtual tape and VTLs (backup window too big? Use fast disk acting like slow tape and then offload to tape later.) Are DCB and FCoE just a band-aid?

iSCSI isn’t the answer either, and it comes down to the same reason I question FCoE end-to-end: the issue is SCSI.

We keep relying on this solid but dated underlying disk access mechanism that was never intended to be networked. Because of this we keep having to over-engineer the network itself (FC/FCoE), or end up with complex networks prone to performance issues (iSCSI).

Maybe it is time to look at alternatives, maybe it’s other network mechanisms, maybe it’s taking a look at SCSI itself and deciding whether or not it’s the right tool for tomorrow’s job.

Hallelujah! Thank you thank you thank you! The problem isn’t FCoE, or even Ethernet, it’s that we still stick with an entirely outdated concept of computing. We base everything we do on a monolithic microcomputer model, with captive CPU, RAM, and I/O and run it all on SCSI and outdated file systems.

What we need is a real, actual computer revolution. How about a solid, re-thought protocol for storage instead of another implementation of SCSI?

I’m with you, brother…

Great commentary. The FCoTR meme just gives a camouflaged way to highlight that aspects of “everything on Ethernet” aren’t ideal.

Will the FCoE “band aid” lead to a bloated, Rube Goldberg-like Ethernet mess? Probably not. Until it’s proven enough and cheap enough, though, it will face legit resistance, not the least of which comes from other “everything on Ethernet” cults, like iSCSI. Weren’t there more iSCSI products at Tech Field Day than FCoE?

Besides, fabrics must ultimately converge on the power cords anyway; they’re the thickest cables.

I’m all for a storage “revolution”. To an application, external storage is just “Tier 2 DRAM”. Why does storage go through hurdles to present itself as a hard drive?

Disclosure: I work for HP.

A trend among datacenter operators who need large amounts of storage is to build their storage infrastructure from large collections of commodity parts. Facebook’s Haystack, the Backblaze POD, and Google’s GFS are all examples of this. The economic benefits of using commodity parts are compelling.

To put it more succinctly: when there is a mismatch between Fibre Channel and Ethernet, it is Fibre Channel which gets tossed out.

Disclosure: I work for Google, but my nerdy opinions are my own.