This is a follow-up to my story, "De-Duplication Goes Mainstream."

Although deduplication of storage is nothing new, with Data Domain and others making hay with the technique for years, it has never been ready for prime time: reducing the primary storage consumed by active applications like email and databases. Instead, deduplication has been relegated to second- or third-tier status, deduplicating archives and backup data. But change is in the air, and deduplication vendors are starting to bustle towards the bright lights of primary storage.

Stone Knives and Bear Skins

We have all been here before, of course. Back at the dawn of the personal computer era, data compression was a hot topic of conversation. I recall being so impressed by an article in Byte (1986:5, p99) outlining Huffman coding that I tried cooking up an implementation in Atari BASIC. Lossless compression has a magical pull on the geek in many of us: redundant data just wants to be eliminated!

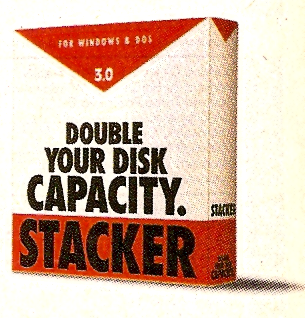
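
For readers who have not met Huffman coding, here is a minimal Python sketch (not the listing from the Byte article). It counts how often each symbol appears, repeatedly merges the two least frequent subtrees, and so gives frequent symbols shorter bit strings. The names `huffman_code`, `encode`, and `decode` are illustrative, and the codes are kept as strings of "0" and "1" for readability rather than packed into real bits.

```python
import heapq
from collections import Counter


def huffman_code(data: str) -> dict[str, str]:
    """Build a prefix-free code table mapping each symbol to a bit string."""
    freq = Counter(data)
    if not freq:
        return {}
    if len(freq) == 1:
        # A lone distinct symbol still needs a one-bit code.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tiebreaker, {symbol: partial code}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


def encode(data: str, table: dict[str, str]) -> str:
    return "".join(table[ch] for ch in data)


def decode(bits: str, table: dict[str, str]) -> str:
    reverse = {code: sym for sym, code in table.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in reverse:  # prefix-free, so the first match is correct
            out.append(reverse[current])
            current = ""
    return "".join(out)


if __name__ == "__main__":
    text = "abracadabra"
    table = huffman_code(text)
    bits = encode(text, table)
    print(len(bits), "bits instead of", 8 * len(text))  # 23 bits instead of 88
    print(decode(bits, table) == text)
```

The frequent "a" ends up with a one-bit code while the rare "c" and "d" get three bits each, which is where the savings come from.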

Companies soon applied compression to primary storage, especially the limited storage in personal computers. Stacker was a hit after 1990, until Microsoft built a workalike, called DoubleSpace, into MS-DOS 6.0 in 1993, leading to a historic lawsuit. I personally used the AddStor SuperStor disk compression built into DR-DOS 6.0 to stretch two more years out of the 20 MB MFM hard drive in my AT&T PC6300 at WPI.

But something funny happened in the late 1990s: compression began to lose its luster. Compressing data always takes quite a bit of CPU power, but on early PCs this cost was partly offset by shorter data transfers and the more efficient file system layout that compression afforded. As disks got larger and faster, though, spending precious CPU time to save space seemed less and less compelling. Today, although nearly every operating system includes built-in compression of files, folders, or even whole disks, these features are rarely used. And compression was never popular in the performance-sensitive enterprise space.

Deduplication Has a Nice Ring

Although traditional fine-grained compression has not been very successful in the enterprise, its lanky cousin, single-instance storage, has found plenty of niche jobs. Applications from databases to email systems to file servers have long been able to recognize requests to store the exact same file or record and keep just a single instance. Even file systems can do single-instance storage through links, though these are created by the user rather than automatically.
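
A toy single-instance store shows the idea: hash each incoming file, and if that hash is already present, record only a pointer to the existing copy. This Python sketch is hypothetical (`SingleInstanceStore` and its methods are invented for illustration, not any product's API) and assumes that matching SHA-256 digests mean identical content; a production system might also compare the bytes before discarding a copy, and would reclaim blobs no name points to any more.

```python
import hashlib


class SingleInstanceStore:
    """Toy single-instance store: identical files are kept only once."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}  # content digest -> stored bytes
        self.names: dict[str, str] = {}    # file name -> content digest

    def put(self, name: str, data: bytes) -> bool:
        """Store data under name; return True if new bytes were written."""
        digest = hashlib.sha256(data).hexdigest()
        self.names[name] = digest
        if digest in self.blobs:
            return False  # duplicate: just point the name at the existing copy
        self.blobs[digest] = data
        return True

    def get(self, name: str) -> bytes:
        return self.blobs[self.names[name]]

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blobs.values())


if __name__ == "__main__":
    store = SingleInstanceStore()
    attachment = b"quarterly numbers" * 100
    store.put("alice/report.pdf", attachment)   # written
    store.put("bob/report.pdf", attachment)     # duplicate, only a pointer
    print(store.stored_bytes())                 # 1700, not 3400
```

Note that this only helps when files match exactly; change one byte of the attachment and the whole thing is stored again.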

In the late 1990s, FilePool began developing a content-addressable storage device, and EMC acquired the company in 2001. The device, later known as the Centera, was one of several storage platforms aimed at the archiving market introduced this decade. At the same time, virtual tape libraries made the jump from the mainframe to open systems. Both kinds of device sat outside the critical performance path while offering massive capacity, so they were well suited to advanced capacity optimization technologies that combined the concepts of compression and single-instance storage. Thus was created the modern world of data deduplication.

What we think of as deduplication is neither fish nor fowl: it assesses larger "chunks" of data than compression technologies do, delivering greater capacity savings and potentially reducing the performance impact, but it is more flexible than single-instancing because it recognizes similarities within files or objects.
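
One common way to find duplicates inside files is content-defined chunking: a rolling hash slides over the data and declares a chunk boundary wherever the hash matches a bit pattern, so boundaries follow the content rather than fixed offsets, and an insertion only disturbs the chunks near it. The Python sketch below assumes a simple polynomial (Rabin-Karp style) rolling hash and arbitrary, hypothetical size parameters (`WINDOW`, `MASK`, `MIN_CHUNK`, `MAX_CHUNK`); `chunk` and `ChunkStore` are illustrative names, not any vendor's design, and commercial products differ in hashing, chunk sizes, and indexing.

```python
import hashlib
import random

# Hypothetical tuning parameters for this sketch, not taken from any product.
WINDOW = 48                   # bytes covered by the rolling hash
MASK = (1 << 11) - 1          # boundary when the low 11 bits are zero (~1 in 2048)
MIN_CHUNK, MAX_CHUNK = 512, 8192
BASE, MOD = 257, 1 << 32
POW_OUT = pow(BASE, WINDOW, MOD)  # weight of the byte leaving the window


def chunk(data: bytes) -> list[bytes]:
    """Split data at content-defined boundaries using a rolling hash."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = (h * BASE + byte) % MOD
        if i >= WINDOW:
            h = (h - data[i - WINDOW] * POW_OUT) % MOD  # drop the oldest byte
        length = i - start + 1
        if (length >= MIN_CHUNK and (h & MASK) == 0) or length >= MAX_CHUNK:
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])  # trailing partial chunk
    return chunks


class ChunkStore:
    """Keeps one copy of each distinct chunk, keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}

    def write(self, data: bytes) -> tuple[list[str], int]:
        """Store data; return its chunk recipe and the number of new bytes."""
        recipe, new_bytes = [], 0
        for c in chunk(data):
            digest = hashlib.sha256(c).hexdigest()
            if digest not in self.chunks:
                self.chunks[digest] = c
                new_bytes += len(c)
            recipe.append(digest)
        return recipe, new_bytes

    def read(self, recipe: list[str]) -> bytes:
        return b"".join(self.chunks[d] for d in recipe)


if __name__ == "__main__":
    original = random.Random(42).randbytes(200_000)
    edited = b"NEW HEADER" + original  # insert 10 bytes at the front
    store = ChunkStore()
    _, first = store.write(original)
    _, second = store.write(edited)
    print("new bytes for original:", first)
    print("new bytes for edited copy:", second)
```

Whole-file single-instancing would store the edited copy in full; here only the chunks around the inserted header are new, because boundaries further along fall at the same content positions in both versions.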

But it is still maddeningly difficult to scale deduplication while maintaining performance. Rather than fight for reasonable write throughput, most deduplication products have switched to post-processing, deferring their work to quieter times.
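
Post-processing can be sketched as a store that accepts writes at full speed and only later hashes and collapses duplicates. In this hypothetical Python example, `PostProcessStore`, `write`, and `dedupe_pass` are made-up names; for brevity it deduplicates whole objects rather than chunks, and the "quiet time" background pass is simply a method you call when the system is idle.

```python
import hashlib


class PostProcessStore:
    """Toy post-process dedup: writes land raw, a later pass collapses duplicates."""

    def __init__(self) -> None:
        self.raw: dict[str, bytes] = {}    # name -> data not yet deduplicated
        self.blobs: dict[str, bytes] = {}  # digest -> single stored copy
        self.index: dict[str, str] = {}    # name -> digest for processed data

    def write(self, name: str, data: bytes) -> None:
        # Fast path: no hashing or index lookups while the application waits.
        self.raw[name] = data
        self.index.pop(name, None)

    def read(self, name: str) -> bytes:
        if name in self.raw:
            return self.raw[name]
        return self.blobs[self.index[name]]

    def dedupe_pass(self) -> int:
        """Run during quiet periods; return the number of bytes reclaimed."""
        reclaimed = 0
        for name, data in list(self.raw.items()):
            digest = hashlib.sha256(data).hexdigest()
            if digest in self.blobs:
                reclaimed += len(data)  # duplicate: drop the raw copy
            else:
                self.blobs[digest] = data
            self.index[name] = digest
            del self.raw[name]
        return reclaimed

    def used_bytes(self) -> int:
        return sum(map(len, self.raw.values())) + sum(map(len, self.blobs.values()))


if __name__ == "__main__":
    store = PostProcessStore()
    store.write("a", b"x" * 1000)
    store.write("b", b"x" * 1000)
    store.write("c", b"y" * 500)
    print("before pass:", store.used_bytes())
    print("reclaimed:", store.dedupe_pass())
    print("after pass:", store.used_bytes())
```

The trade-off shows up in `used_bytes`: until the pass runs, duplicate data occupies full space, so a post-processing system needs spare capacity to hold data awaiting processing.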

It’s Not Just for Breakfast

Regardless of their methods or underlying technology, however, no deduplication vendor has yet stood up to support demanding low-latency or high-throughput production applications. NetApp was the first to raise the issue of support for production applications, but although they tout the technology for VMware, they haven't exactly been shouting from the rooftops to get their A-SIS deduplication technology deployed in other high-I/O applications. And I haven't seen Hifn's card yet.

Yesterday, I mentioned that greenBytes was adding deduplication to their ZFS-based storage array for primary data. And now Riverbed has fired another shot over the bow, repurposing their (deduplicating) WAN accelerator product for primary (file) storage. They might be able to pull it off, too, since they have a long list of customers who are already enjoying the technology in production. It’s not a stretch to suggest that Riverbed’s appliances can scale to handle production data loads. Although it’s file-only, I can imagine quite a few scenarios where this tech could really yield benefits. Could we come full-circle, with deduplication finally reaching the enterprise storage world?
